Updates tagged: “computing”

Needle in a haystack

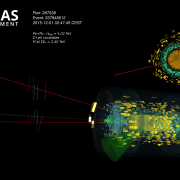

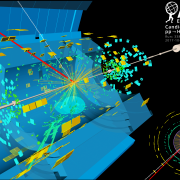

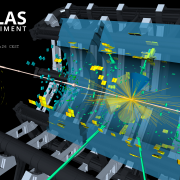

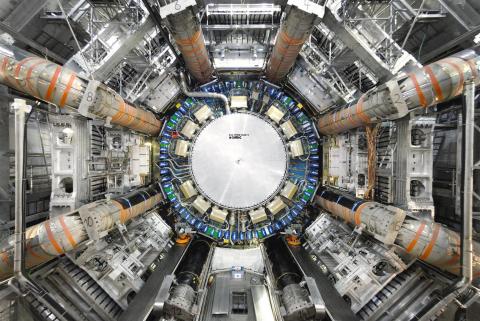

The LHC is designed to collide bunches of protons every 25 ns, i.e., at a 40 MHz rate (40 million/second). In each of these collisions, something happens. Since there is no way we can collect data at this rate, we try to pick only the interesting events, which occur very infrequently; however, this is easier said than done. Experiments like ATLAS employ a very sophisticated filtering system to keep only those events that we are interested in. This is called the trigger system, and it works because the interesting events have unique signatures that can be used to distinguish them from the uninteresting ones.
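The arithmetic and the filtering idea above can be sketched in a few lines. This is only a toy illustration, not the ATLAS trigger: the event field `max_pt_gev`, the 40 GeV threshold, and the exponential toy spectrum are all hypothetical choices made for the example.

```python
import random

BUNCH_SPACING_NS = 25.0  # bunch spacing quoted in the post


def crossing_rate_hz(spacing_ns: float) -> float:
    """Convert a bunch spacing in nanoseconds to a crossing rate in Hz."""
    return 1e9 / spacing_ns


def make_toy_events(n: int, seed: int = 0) -> list:
    """Generate n fake events with a steeply falling 'max_pt_gev' value.

    The exponential shape (mean 5 GeV) is an assumption made purely for
    illustration; it is not a model of real collision data.
    """
    rng = random.Random(seed)
    return [{"id": i, "max_pt_gev": rng.expovariate(1 / 5.0)} for i in range(n)]


def toy_trigger(events: list, threshold_gev: float) -> list:
    """Keep only events whose signature passes a (hypothetical) threshold."""
    return [e for e in events if e["max_pt_gev"] >= threshold_gev]


if __name__ == "__main__":
    rate = crossing_rate_hz(BUNCH_SPACING_NS)
    print(f"Crossing rate: {rate / 1e6:.0f} MHz")

    events = make_toy_events(100_000)
    kept = toy_trigger(events, threshold_gev=40.0)
    print(f"Kept {len(kept)} of {len(events)} toy events")
```

A single cut on one quantity keeps the example short; a real trigger combines many signatures and runs in several stages.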

From 0-60 in 10 million seconds! – Part 2

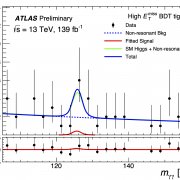

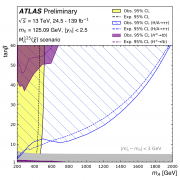

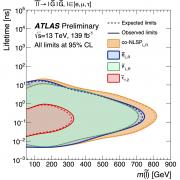

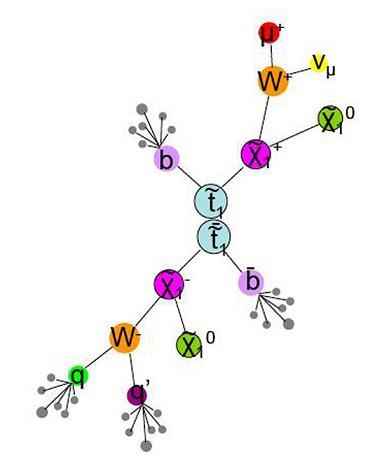

This continues the previous post, where I discussed how we convert data collected by ATLAS into usable objects. Here I explain the steps to get a physics result. I can now use our data sample to prove or disprove the predictions of Supersymmetry (SUSY), string theory or what have you. What steps do I follow?

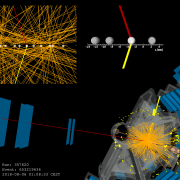

From 0-60 in 10 million seconds! – Part 1

OK, so I’ll try to give a flavour of how the data that we collect gets turned into a published result. As the title indicates, it takes a while! The post got very long, so I have split it in two parts. The first will talk about reconstructing data, and the second will explain the analysis stage.

7 or 8 TeV, a thousand terabyte question!

A very happy new year to the readers of this blog. As we start 2012, hoping to finally find the elusive Higgs boson and other signatures of new physics, an important question needs to be answered first - are we going to have collisions at a center of mass energy of 7 or 8 TeV?

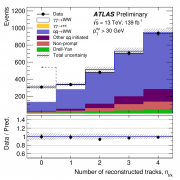

Top down: Reflections on a long and sleepless analysis journey

For the last few months (which feel like years…) I’ve been working, within a small group of people, on the precision measurement of the top quark pair production cross section, and if you think that sounds complicated – the German word is “Top-Quark-Paarproduktionswechselwirkungsquerschnitt”.

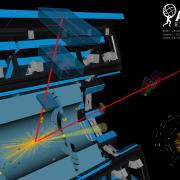

Dress Rehearsal for ATLAS debut

Dave Charlton and his team have a mammoth job on their hands; Charlton has been tasked with coordinating the Full Dress Rehearsal (FDR) of the computing and data analysis processes of the ATLAS experiment, a run-through which he describes as "essential, almost as much as ensuring the detector itself actually works".

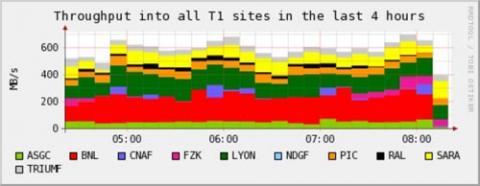

ATLAS copies its first PetaByte out of CERN

On 6th August ATLAS reached a major milestone for its Distributed Data Management project - copying its first PetaByte (10^15 Bytes) of data out from CERN to computing centers around the world. This achievement is part of the so-called 'Tier-0 exercise' running since 19th June, where simulated data is used to exercise the expected data flow within the CERN computing centre and out over the Grid to the Tier-1 computing centers as would happen during the real data taking.
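For a rough sense of scale, the two dates above give an average export rate. This is a back-of-the-envelope sketch that assumes the petabyte was copied evenly over the whole period from 19th June to 6th August, which the post does not state. The year passed to `date` is a placeholder, since the post does not give one and the day count between these two dates is the same in any year.

```python
from datetime import date

PETABYTE_BYTES = 10**15
SECONDS_PER_DAY = 86_400


def elapsed_days(start: date, end: date) -> int:
    """Number of whole days between two calendar dates."""
    return (end - start).days


def average_rate_mb_per_s(total_bytes: int, days: int) -> float:
    """Average throughput in MB/s (1 MB = 10^6 bytes) over the given days."""
    return total_bytes / (days * SECONDS_PER_DAY) / 1e6


if __name__ == "__main__":
    placeholder_year = 2001  # hypothetical; not taken from the post
    days = elapsed_days(date(placeholder_year, 6, 19), date(placeholder_year, 8, 6))
    rate = average_rate_mb_per_s(PETABYTE_BYTES, days)
    print(f"{days} days, about {rate:.0f} MB/s on average")
```

Under that even-spread assumption the average comes out at a few hundred MB/s; the actual transfer rate would have varied over the exercise.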